Here’s how HSM can deliver on the promises of AI, but in a more energy-efficient and open way.

So your company helps people to organize information and understand things? Why aren’t you an AI company?

I get this question a lot when I talk about HSM, especially over the last couple of years.

One way to answer would be to say that, by some definitions of AI, we are. But we’re definitely not doing machine learning or large language models (which is what people really mean by AI these days). Instead, we’re building an information system that will facilitate what we do today with AI, while better protecting the environment and keeping our information open.

HSM does this by expressing the structure of information via a public, interoperable syntax. We the people can explore this information for our own purposes, while AI can learn from it. We can thus reduce the power consumption AI requires, because it can learn from information that is already structured the way information and ideas are, rather than from, say, books and the Web.

Why Is HSM Working on Hypertext and Not LLMs?

I’ve been interested in AI since watching 2001: A Space Odyssey as a child, and much more so after reading Polish writer Stanislaw Lem’s exploration of the implementation and ramifications of AI in his short story “Golem XIV.”

As mentioned above, AI means a lot more than LLMs. Indeed, my programming language of choice, Lisp, is closely associated with an older vision of AI: “Expert Systems,” which aimed to store human knowledge in an abstract form and let a user interact with that knowledge as though consulting an expert.

This vision, though out of favor today, has the advantage of storing information in a form that we can access and explore, unlike an LLM. Oddly enough, this type of AI is very similar to the fuller vision of hypertext: a growing network of interconnected nodes that expresses human knowledge.

I think that building good hypertext systems is a more pressing need than building AI. Why? The computer screen is the retina of the mind’s eye. However useful and important you think AI is, you will access it through a hypertext (or hypermedia) system. All the limitations and distortions of the hypertext systems we use to access the things we create will come back to us, with renewed vigor. Given the social and environmental problems caused by how we work with information today (obliviousness as to where ideas come from and to their overall structure), using AI at scale will be catastrophic.

So How Can HSM Help?

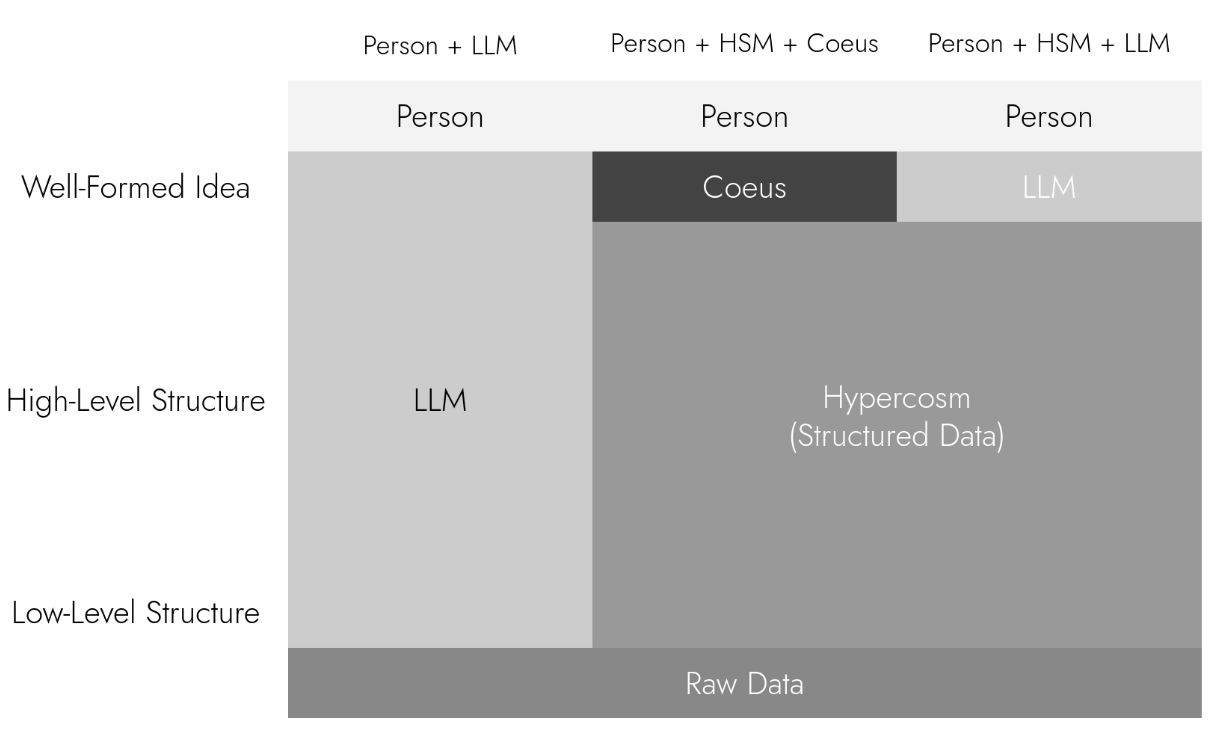

In short, HSM has the power to solve the energy consumption problem of LLMs in two ways: by helping current AI models do their work more directly, or by helping people get the answers they need without using AI at all. It can do this while opening the black box of AI, storing the information structure in an open, interoperable repository instead.

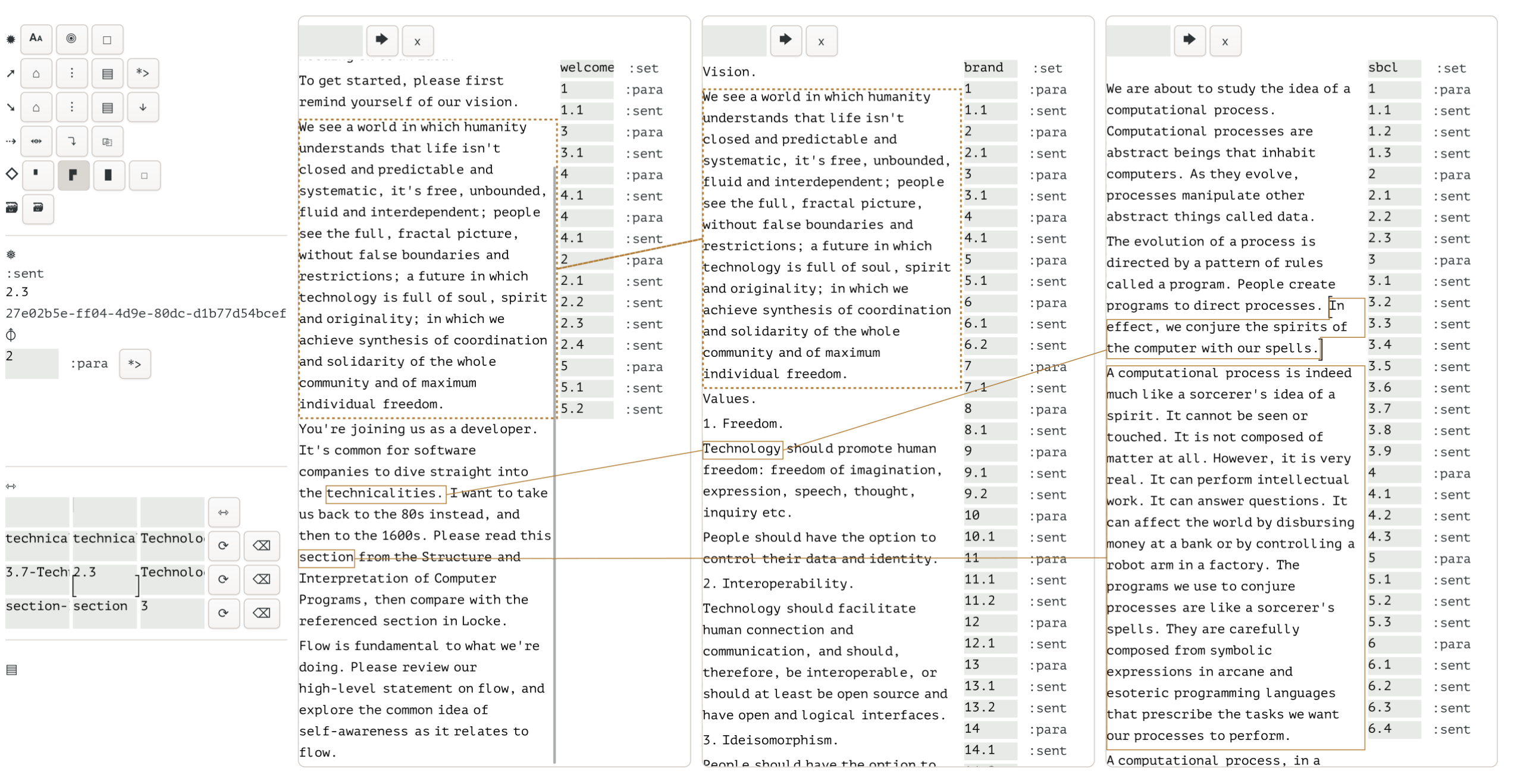

Here’s an overview of how HSM and its frontend, Coeus, can store and visualize information structure. HSM allows you to create and store not just words and paragraphs, but any type of relationship, such as links, sets, trees, graphs, and hypergraphs. All of these are stored as first-class objects: a link, say, is uniquely identified and stored independently of the things it links. Our term for this repository of information structure, owned and controlled by its users, is the Hypercosm.

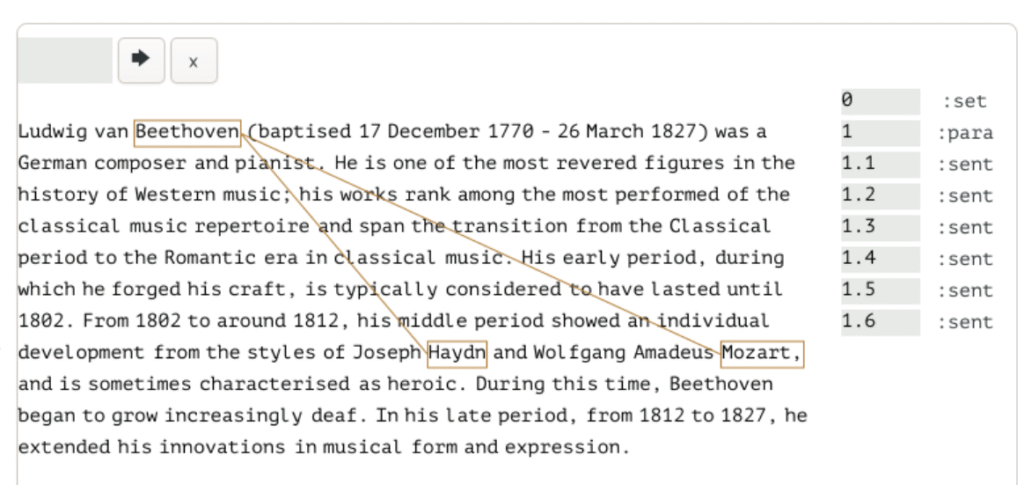

The screenshot shows an example: two links, one between the word “Beethoven” and the word “Haydn”, and another between Beethoven and Mozart. These are stored in a relationship-first way, meaning the links are uniquely identified and accessible in their own right: I, or some other system, can query and study all the links pertaining to, say, composers, without referring to (or even knowing about) the underlying articles.

You could say that HSM makes it possible to store structured data about the actual shape of human knowledge.
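To make the relationship-first idea a little more concrete, here is a minimal Python sketch. It is not HSM’s actual data model or syntax: the names `Anchor`, `Link`, `LinkStore`, and `resolve`, the tag strings, and the assumption that links point at character spans inside documents are all hypothetical. What it illustrates is a link as a record with its own ID, stored apart from the articles it connects, so that a query about composers only ever scans link records.

```python
from __future__ import annotations

import uuid
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Anchor:
    """One end of a relationship: a span of characters in some document (hypothetical)."""
    doc_id: str
    start: int
    end: int


@dataclass(frozen=True)
class Link:
    """A relationship stored as a first-class record with its own identity."""
    endpoints: tuple[Anchor, ...]  # two endpoints for a link; more would hint at a hyperedge
    tags: frozenset[str] = frozenset()
    link_id: str = field(default_factory=lambda: uuid.uuid4().hex)


class LinkStore:
    """Holds links separately from the documents they connect."""

    def __init__(self) -> None:
        self._links: dict[str, Link] = {}

    def add(self, link: Link) -> str:
        self._links[link.link_id] = link
        return link.link_id

    def get(self, link_id: str) -> Link:
        return self._links[link_id]

    def with_tag(self, tag: str) -> list[Link]:
        # Only link records are scanned; no document text is read here.
        return [link for link in self._links.values() if tag in link.tags]


def resolve(anchor: Anchor, documents: dict[str, str]) -> str:
    """Optionally look up the text an anchor points at, if the document is available."""
    return documents[anchor.doc_id][anchor.start:anchor.end]


# Hypothetical data mirroring the Beethoven/Haydn/Mozart example.
documents = {"bio-1": "Beethoven studied with Haydn and admired Mozart."}
beethoven = Anchor("bio-1", 0, 9)
haydn = Anchor("bio-1", 23, 28)
mozart = Anchor("bio-1", 41, 47)

store = LinkStore()
store.add(Link((beethoven, haydn), frozenset({"composers", "studied-with"})))
store.add(Link((beethoven, mozart), frozenset({"composers"})))

for link in store.with_tag("composers"):
    print([resolve(a, documents) for a in link.endpoints])
```

The query in `with_tag` never touches `documents`; turning endpoints back into text is a separate, optional step. Sets, trees, and hypergraphs are left out to keep the sketch short.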

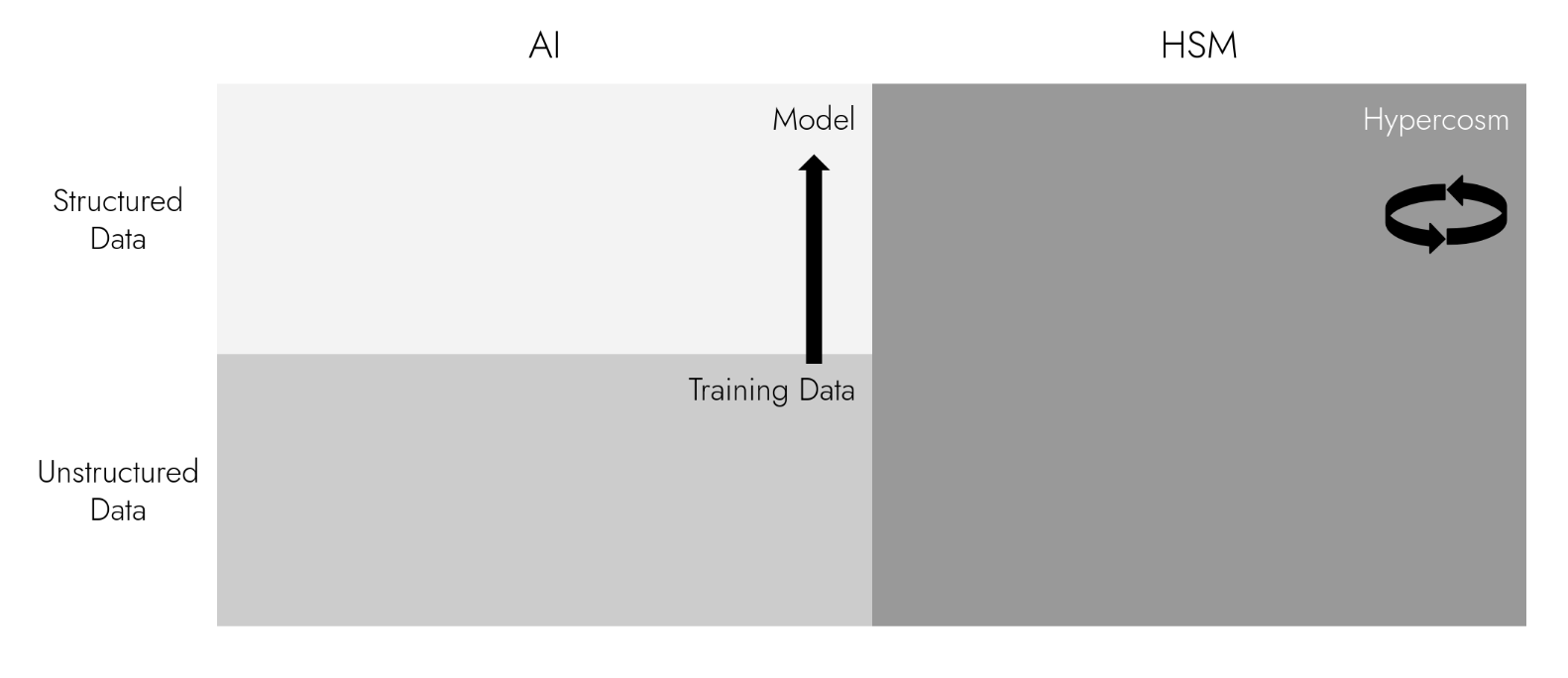

AI, or at least the LLMs that people compare with HSM, works by organizing and integrating information to present a user with a summary or synthesis in natural language. There are two problems with this:

- Such AIs organize data that lacks the explicit structure shown above; instead, they have to infer relationships from textual similarities and references, which is partly why building AI models takes such astonishing amounts of energy.

- AI models are black boxes. Whatever the AI learns is locked inside, inaccessible to the community. For instance, ChatGPT will explain that it can define and interpret legal terms by cross-referencing legal dictionaries, but it cannot tell you which particular cross-references it made.

How HSM Can Help 1: A Way to Understand Information Structure Without AI

HSM will actually deliver on the promise of the word “Web”: a growing set of relationships and interconnections organizing information. People will be able to store, query, filter, organize, and visualize these relationships. Most will be public, some private, and some available for a fee, but all will be based on the same lingua franca syntax and will thus be interoperable.

See the screenshot below, in which Coeus visualizes the relationships between pieces of information, without the vagaries and bias of natural language:

Using HSM instead of an LLM will sometimes be faster and sometimes slower, but it will likely always be more energy-efficient, and it will always avoid the vagaries of natural language.

How HSM Can Help 2: A Better Information Foundation for More Efficient Training

Of course, people may still wish to have things explained to them in natural language.* For that, AI models will be able to learn from information already structured to express meaning and relationships explicitly, and thus at a far lower energy (and environmental) cost. AI can stop wasting effort structuring the unstructured.

In summary, HSM is not an AI company in the modern sense of the word. It is, however, building technology that stands to reduce one of the most concerning aspects of AI: its immense power consumption. And it can do so while helping humanity keep its information, and its understanding of the world, open.

* Natural language has many issues, among them ambiguity and the certainty that it will entrench existing cultural barriers and inequalities.